Artificial intelligence has become a transformative force across industries, promising unprecedented efficiency, insights, and automation. However, the rapid adoption of AI technologies introduces complex challenges that organisations must address systematically. AI risk extends beyond simple technical failures to encompass governance gaps, ethical concerns, operational vulnerabilities, and regulatory compliance issues. For organisations managing sensitive operations, understanding and mitigating these risks is not optional; it is essential to maintaining operational integrity and stakeholder trust.

The risks of AI manifest across multiple dimensions, each requiring specialised attention and mitigation strategies. Organisations increasingly rely on AI systems for decision-making, operational support, and strategic planning, yet many lack comprehensive frameworks to assess and manage associated threats.

Modern risk management approaches group AI-related threats into three broad categories. Governance risks emerge from inadequate oversight structures, unclear accountability chains, and insufficient policy frameworks. Technical risks include algorithmic bias, model drift, adversarial attacks, and system failures that compromise reliability. Operational risks involve deployment challenges, integration issues, and dependencies that create vulnerabilities in business processes.

The NIST AI Risk Management Framework provides a comprehensive foundation for organisations seeking to incorporate trustworthiness considerations into their AI initiatives. This structured approach helps teams identify potential failure points before they escalate into critical incidents.

Recent research has documented an extensive range of AI-related threats. A comprehensive meta-review compiled 777 distinct AI risks from various taxonomies, providing organisations with a common reference framework for risk identification and assessment.

Key vulnerability categories include:

The complexity of these vulnerabilities requires organisations to adopt multi-layered defences rather than relying on single-point solutions. Alma Risk's approach emphasises intelligence-led assessment that considers both immediate threats and emerging risk patterns.

Addressing AI-related risks demands robust technical safeguards implemented throughout the system lifecycle. Organisations must embed security and reliability measures from initial design through deployment and ongoing operations.

Modern frameworks for AI risk detection employ systematic methodologies that go beyond ad-hoc testing. The AURA framework introduces mechanisms for detecting, quantifying, and mitigating risks associated with autonomous AI agents, emphasising human-in-the-loop oversight as a critical control mechanism.

Organisations should establish continuous monitoring systems that track model performance, input distributions, and output patterns. Deviations from expected behaviour often signal emerging risks that require immediate investigation.
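One way to make "deviations from expected behaviour" measurable is to compare the distribution of a model input (or output score) in production against a baseline sample. The sketch below uses the Population Stability Index as an illustrative drift metric; the function names, the ten-bin layout, and the 0.1 / 0.25 cut-offs are assumptions based on a common rule of thumb, not values prescribed by any framework mentioned here.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples using equal-width bins derived from the baseline."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate baseline: every value lands in the first bin

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_status(psi: float) -> str:
    """Map a PSI value to an action label (thresholds are assumed conventions)."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "watch"
    return "investigate"
```

In practice a check like this would run on a schedule for each monitored feature, with "investigate" results routed to whoever owns the model.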

The risk with AI escalates when systems lack redundancy and fail-safe mechanisms. Resilient architectures incorporate multiple layers of protection, ensuring that single-point failures do not cascade into catastrophic outcomes.
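As a minimal illustration of a fail-safe layer, the sketch below wraps a primary model call so that an exception or an implausible output falls back to a simpler, more predictable path. All names here (`predict_with_fallback`, the `is_valid` check, the route labels) are hypothetical and chosen only for the example.

```python
from typing import Callable, Tuple, TypeVar

X = TypeVar("X")
Y = TypeVar("Y")


def predict_with_fallback(
    primary: Callable[[X], Y],
    fallback: Callable[[X], Y],
    is_valid: Callable[[Y], bool],
    x: X,
) -> Tuple[Y, str]:
    """Return (result, route) where route records which path produced the result."""
    try:
        result = primary(x)
    except Exception:
        # The primary model failed outright: use the conservative path.
        return fallback(x), "fallback:error"
    if not is_valid(result):
        # The primary model answered, but the answer fails a sanity check.
        return fallback(x), "fallback:invalid"
    return result, "primary"
```

Returning the route alongside the result lets monitoring count how often the fallback is used, which is itself a useful warning signal.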

Essential architectural elements include:

Technical safeguards alone cannot eliminate AI-related risks. Organisations must complement these measures with governance structures and operational processes that ensure responsible deployment and use.

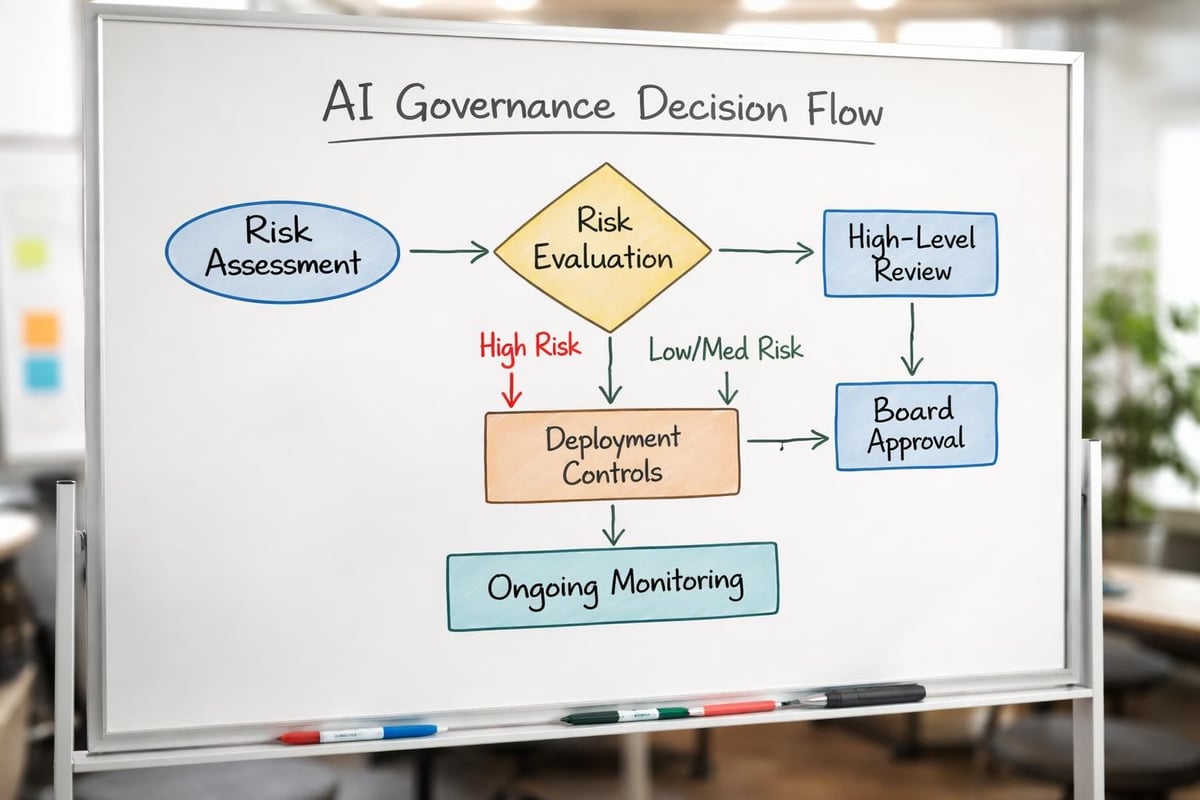

Effective AI risk management requires governance structures that assign clear accountability, define acceptable use parameters, and establish oversight mechanisms. Without robust governance, even technically sound systems can generate unacceptable outcomes.

The AI Hazard Management framework provides a structured process for systematically identifying, assessing, and treating AI hazards throughout the system lifecycle. This approach ensures auditability and enables organisations to demonstrate due diligence to regulators and stakeholders.

Governance structures should address several critical questions: Who owns responsibility for AI system outcomes? What processes govern model deployment decisions? How are risks escalated and addressed? What documentation standards apply to AI initiatives?

Organisations operating in high-risk environments understand that governance cannot exist in isolation. Security and risk management capabilities must integrate AI-specific considerations into existing frameworks rather than treating them as separate concerns.

The regulatory landscape surrounding AI continues to evolve rapidly. Global AI regulation frameworks vary significantly across jurisdictions, creating compliance challenges for international operations.

Effective AI policies should cover:

In the United States, AI regulation currently relies heavily on voluntary commitments and sector-specific rules rather than comprehensive federal legislation. Organisations must monitor regulatory developments and adapt policies accordingly.

The risk with AI extends beyond design and governance into daily operational practices. How organisations deploy, monitor, and maintain AI systems significantly impacts overall risk exposure.

AI systems rarely operate in isolation. They integrate with existing infrastructure, data pipelines, and business processes, creating complex dependency chains. When AI components fail or behave unexpectedly, the impacts ripple through connected systems.

Organisations must explicitly map these dependencies, identifying critical paths where AI failures would disrupt essential operations. This mapping enables prioritised monitoring and targeted contingency planning.
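A dependency map of this kind can be represented as a directed graph and walked to find everything downstream of a failing AI component. The following is a rough sketch under that assumption; the component names in the usage note are invented for illustration.

```python
from collections import deque
from typing import Dict, List, Set


def downstream_impact(dependents: Dict[str, List[str]], failed: str) -> Set[str]:
    """Return every component that directly or transitively depends on `failed`.

    `dependents` maps a component to the components that consume its output.
    """
    affected: Set[str] = set()
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for consumer in dependents.get(node, []):
            # Skip nodes already seen so cycles in the map cannot loop forever.
            if consumer != failed and consumer not in affected:
                affected.add(consumer)
                queue.append(consumer)
    return affected
```

For example, with a hypothetical map `{"fraud_model": ["payment_api", "alerts"], "payment_api": ["checkout"]}`, a failure of `fraud_model` reaches `payment_api`, `alerts`, and `checkout`, which suggests where monitoring and contingency plans should be concentrated.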

Research on AI risk sources emphasises factors that influence reliability and robustness, including data quality issues, limitations in model interpretability, and environmental sensitivity.

Continuous monitoring is a fundamental control for managing AI risk. Effective monitoring systems track multiple indicators simultaneously, providing early warning before minor issues escalate.

When incidents occur, organisations need pre-defined response protocols that specify roles, escalation paths, and remediation procedures. The risks of AI include reputational damage from inadequate incident handling, making response planning essential.

Technology alone cannot mitigate AI-related risks. Human factors, including training, awareness, and organisational culture, play an equally critical role in managing them.

Personnel across organisational levels require AI literacy appropriate to their roles. Technical teams need deep expertise in AI security, fairness testing, and reliability engineering. Business leaders need sufficient understanding to make informed decisions about AI deployment and risk acceptance. End users need awareness of AI system limitations and appropriate escalation procedures.

Training programmes should address both the technical competencies and the judgement skills required for responsible AI use. Organisations operating in complex environments recognise that technology amplifies human capabilities but also magnifies human errors.

The risk with AI diminishes when organisations cultivate cultures that encourage questioning, transparency, and accountability. Risk-aware cultures normalise raising concerns about AI behaviour, reward early problem identification, and support thoughtful consideration of deployment decisions rather than rushing to implementation.

Cultural elements supporting AI risk management include:

Organisations should foster psychological safety in AI risk discussions, ensuring concerns are given serious consideration rather than dismissed.

Many organisations rely on AI systems developed by external vendors rather than building proprietary solutions. This dependency introduces supply chain risks that require specific management approaches.

Evaluating third-party AI providers demands rigorous assessment of their development practices, security controls, and risk management capabilities. Organisations should request documentation of testing procedures, fairness audits, and security assessments before adopting external AI solutions.

Guidance on AI risk management emphasises the importance of contractual provisions addressing liability, performance guarantees, and audit rights. Contracts should specify acceptable performance parameters and remediation requirements when systems fail to meet standards.

The risks of AI supplied by third parties include reduced visibility into system behaviour and limited ability to modify problematic components. Organisations must weigh these constraints against the efficiency gains from commercial solutions.

When AI systems depend on external models or data sources, organisations inherit risks associated with those dependencies. Model providers may change algorithms, deprecate services, or suffer security breaches that compromise dependent systems.

Risk mitigation strategies include:

The risks of AI continue to evolve as technologies advance and adoption deepens. Organisations must anticipate emerging threat patterns and adapt risk management approaches accordingly.

As AI systems gain autonomy, maintaining effective human oversight becomes increasingly challenging. Autonomous agents may make decisions faster than humans can review, operate in environments where intervention is impractical, or pursue objectives in unexpected ways.

The preliminary AI Risk Mitigation Taxonomy organises mitigations into categories, including governance, technical safeguards, operational processes, and transparency measures, providing structured approaches for managing autonomous system risks.

Organisations deploying autonomous AI must define clear operational boundaries, implement robust monitoring, and maintain override capabilities that enable human intervention when necessary.
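The three controls named above (operational boundaries, monitoring hooks, and a human override) can be sketched as a small gate that every proposed agent action passes through. This is an illustrative design only; the class name, the decision labels, and the notion of a numeric `risk_score` are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass
class ActionGate:
    """Decides whether an autonomous agent's proposed action may proceed."""

    allowed_actions: FrozenSet[str]   # the operational boundary
    approval_threshold: float         # at or above this score, a human must approve
    halted: bool = False              # override switch controlled by operators

    def halt(self) -> None:
        """Human override: block all further actions until reset."""
        self.halted = True

    def decide(self, action: str, risk_score: float) -> str:
        if self.halted:
            return "blocked"
        if action not in self.allowed_actions:
            return "blocked"
        if risk_score >= self.approval_threshold:
            return "needs_human_approval"
        return "allowed"
```

Checking the override first means a human halt takes precedence over everything else, including actions that would otherwise be low risk.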

The risk with AI becomes particularly acute in high-stakes applications where failures threaten lives, security, or critical infrastructure. Organisations operating in such contexts require enhanced safeguards and more conservative deployment approaches.

For organisations providing protective services or operating in challenging environments, AI tools offer valuable capabilities for threat detection, route optimisation, and situational awareness. However, these applications demand exceptional reliability and human oversight to prevent catastrophic misjudgments.

Regulatory frameworks governing AI continue to develop globally, with different jurisdictions adopting varying approaches. Organisations must track regulatory changes and adapt compliance programmes to meet evolving requirements.

Trustworthy AI research from NIST outlines key characteristics, including fairness, explainability, security, and privacy, that increasingly inform regulatory expectations. Organisations should embed these principles into AI development and deployment practices before mandates are issued.

AI risk should be integrated into enterprise-wide risk management frameworks rather than treated as a separate concern. Organisations with mature risk management practices can extend existing processes to cover AI-specific threats.

Effective AI risk assessment requires input from multiple perspectives. Technical teams understand implementation vulnerabilities, business units recognise operational impacts, legal teams identify compliance concerns, and security specialists evaluate threat vectors.

Organisations should establish cross-functional review processes for AI initiatives, ensuring comprehensive risk consideration before deployment decisions. Risk consultancy approaches that incorporate intelligence-led assessment can reveal non-obvious threat patterns and interdependencies.

Organisations must define risk appetite for AI deployments, specifying acceptable levels of uncertainty, potential impact thresholds, and circumstances requiring executive approval. Clear acceptance criteria enable consistent decision-making and prevent ad-hoc judgments that may overlook critical risks.

Risk appetite should reflect the organisational context, including the industry sector, operational environment, and stakeholder expectations. Organisations in regulated industries or high-consequence environments typically maintain lower risk tolerance for AI deployments.
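Acceptance criteria become easier to apply consistently once they are written down as explicit thresholds. The sketch below assumes a conventional 5x5 likelihood-impact scoring scheme; the scale, the default cut-offs, and the outcome labels are illustrative assumptions, and an organisation with lower risk tolerance would simply tighten them.

```python
def classify_risk(
    likelihood: int,
    impact: int,
    accept_max: int = 6,
    escalate_min: int = 15,
) -> str:
    """Classify a deployment risk scored on 1-5 likelihood and 1-5 impact scales."""
    if not (1 <= likelihood <= 5 and 1 <= impact <= 5):
        raise ValueError("likelihood and impact must each be between 1 and 5")
    score = likelihood * impact
    if score <= accept_max:
        return "accept"              # within stated risk appetite
    if score >= escalate_min:
        return "executive_approval"  # beyond delegated authority
    return "mitigate"                # reduce the risk before deployment
```

A regulated or high-consequence operator might, for instance, call `classify_risk(3, 4, accept_max=4, escalate_min=10)` so that the same score of 12 requires executive approval rather than routine mitigation.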

Managing the risk with AI requires ongoing learning and adaptation. Organisations should capture lessons from incidents, near-misses, and successful mitigations, and incorporate these insights into updated practices.

Post-incident reviews should examine not only technical failures but also process gaps, communication breakdowns, and cultural factors that contributed to problems. This comprehensive analysis enables meaningful improvement rather than superficial fixes.

Managing AI risk demands structured frameworks, technical safeguards, robust governance, and organisational commitment to responsible deployment. As AI systems become more capable and autonomous, the imperative for comprehensive risk management intensifies. Organisations cannot afford reactive approaches that address threats only after they materialise. Whether the priority is navigating regulatory compliance, protecting critical operations, or deploying AI in challenging environments, Alma Risk provides intelligence-led risk management solutions that help organisations anticipate and mitigate AI-related threats before they compromise operations or stakeholder trust.